In the rapidly evolving landscape of artificial intelligence, models that can understand both visual and textual data are transforming how machines interact with the world. Google’s latest advancement, PaliGemma 2, stands out as a groundbreaking vision-language model that reshapes what is possible in multimodal AI systems.

With a focus on open access, lightweight architecture, and state-of-the-art performance, PaliGemma 2 is not just another AI model—it’s a leap forward in how machines interpret complex information. This post explores what makes PaliGemma 2 unique, why it matters, and how it’s redefining the standard for vision-language models in both research and real-world applications.

Understanding PaliGemma 2

PaliGemma 2 is a multimodal model developed by Google DeepMind that merges two key components: a vision encoder and a language model. Together, these elements allow the model to process images and generate meaningful textual responses. It means the model can caption images, answer questions based on visual input, and even understand complex scenes in natural language terms.

Unlike many closed, resource-heavy models, PaliGemma 2 belongs to the Gemma family, which focuses on creating smaller, open-weight AI models accessible to a broad range of users. With this release, Google is pushing the boundaries of what open-source multimodal systems can achieve.

The Role of Vision-Language Models Today

Vision-language models are designed to understand and connect visual data (like images or video frames) with textual information. These models have found applications across multiple sectors:

- Healthcare: Reading X-rays and generating clinical reports

- Retail: Tagging and categorizing product images automatically

- Accessibility: Describing visuals to users with visual impairments

- Education: Providing visual explanations alongside text

- Content Moderation: Detecting harmful or inappropriate images with contextual understanding

By combining sight and language, these models enhance how AI systems interact with the human world, offering a more natural and intuitive interface.

How PaliGemma 2 Redefines Multimodal AI?

PaliGemma 2 is not merely an upgrade; it’s a redefinition of what lightweight, open-vision-language models can accomplish. It introduces several technical and strategic shifts that make it accessible without compromising performance.

Key Differentiators of PaliGemma 2

Below are some of the critical innovations that set PaliGemma 2 apart:

- Open-source availability: With freely available weights and architecture, developers and researchers can fully explore, adapt, and build upon the model.

- Lightweight design: PaliGemma 2 is optimized to run efficiently on standard hardware, including consumer-grade GPUs and CPUs.

- SigLIP-based vision encoder: It uses a refined image encoder that produces high-quality visual embeddings, which improves downstream tasks like captioning or object recognition.

- Language modeling with Gemma: The model uses the Gemma language architecture to ensure fast, accurate generation of text from visual prompts.

- Competitive benchmarks: Despite being smaller and open, PaliGemma 2 performs comparably to many closed-source models on key evaluation benchmarks.

These improvements are crucial in making advanced multimodal AI more inclusive and applicable beyond the research lab.

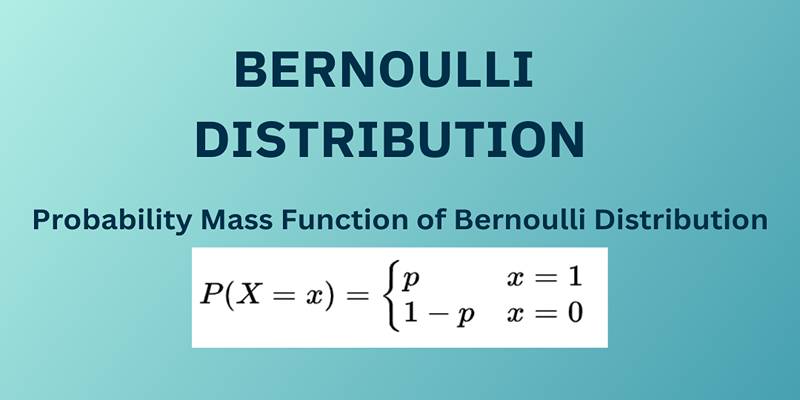

Architecture Breakdown: How PaliGemma 2 Works

The core strength of PaliGemma 2 lies in its modular but tightly integrated design. It consists of:

- A SigLIP vision encoder converts images into dense vector embeddings that capture the image’s meaning.

- A Gemma language model uses these embeddings to generate responses, answers, or descriptions in natural language.

The data flows smoothly from the image input to the final textual output, with special layers in between for alignment and attention control. This setup enables the model to generate answers that are both accurate and contextually relevant.

Practical Use Cases of PaliGemma 2

Thanks to its open design and efficient performance, PaliGemma 2 can be deployed in a variety of real-world scenarios. Some prominent use cases include:

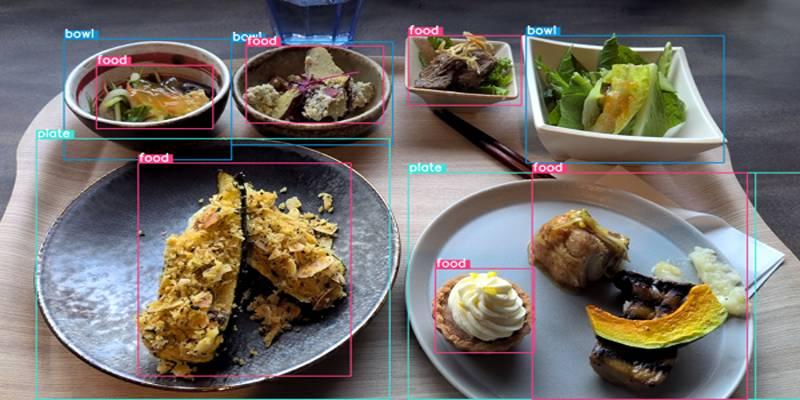

- Visual Question Answering (VQA): Answering questions based on an image, such as identifying objects or describing scenes.

- Image Captioning: Generating accurate and descriptive text captions for photos and graphics.

- Object and Scene Understanding: Recognizing not just individual elements but also how they relate to each other contextually.

- Multilingual Visual Input: Supporting translation and understanding across languages for visual content.

- Smart Assistants: Powering AI tools that need to both see and speak, such as robotic systems or voice assistants.

Performance Benchmarks

When evaluated on standard datasets, PaliGemma 2 showed impressive results for its size:

- COCO Captioning Dataset: Achieved near state-of-the-art scores in generating detailed image descriptions.

- VQAv2 Dataset: Delivered accurate answers to complex image-based questions.

- RefCOCO: Demonstrated strong grounding performance in identifying specific elements within scenes.

Despite being smaller and more accessible, PaliGemma 2 manages to challenge much larger proprietary models in real-world tasks.

Open Access and Developer Readiness

Google’s decision to release PaliGemma 2 with open weights ensures that AI development becomes more democratized. The model is already available on popular platforms such as Hugging Face and can be integrated into machine learning pipelines using frameworks like PyTorch and JAX.

Developers and teams can get started with minimal setup:

- Download the model From Hugging Face or Google repositories.

- Install dependencies: Using Python environments and common libraries.

- Feed in image and prompt: Get a contextual text output in seconds.

- Run locally or on the cloud: With no dependence on high-cost infrastructure.

This approach lowers the barrier to innovation and allows startups, educators, and researchers to explore advanced AI without significant investment.

Conclusion

PaliGemma 2 is more than just an iteration—it’s a redefinition of what vision-language models can be. By prioritizing openness, speed, and versatility, it challenges the norms of multimodal AI and invites a wider community to participate in shaping the future. For developers looking to build smarter applications, researchers studying cognitive systems, or companies aiming to deploy AI efficiently, PaliGemma 2 offers a compelling and practical solution. As vision-language intelligence becomes central to modern AI, models like PaliGemma 2 prove that excellence doesn’t have to come at the cost of accessibility.